C++ API and example

1. Introduction

This page exists to extract the examples from the Doxygen documentation. All of the examples are listed at the end of this page.

2. C++ API and example

-

class BaseCubeModel : public virtual trifinger_object_tracking::BaseCuboidModel

- #include <cube_model.hpp>

Base model for all 65mm cubes.

Subclassed by trifinger_object_tracking::CubeV1Model, trifinger_object_tracking::CubeV2Model, trifinger_object_tracking::CubeV3Model

Public Functions

-

inline virtual CornerPositionArray get_corners() const override

Get homogeneous coordinates (x, y, z, 1) of the cube corners.

Public Static Attributes

-

static constexpr float WIDTH = 0.0652

-

static constexpr float HALF_WIDTH = WIDTH / 2.0

-

static constexpr CornerPositionArray cube_corners{{{+HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH, 1}, {+HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH, 1}, {-HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH, 1}, {-HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH, 1}, {+HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH, 1}, {+HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH, 1}, {-HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH, 1}, {-HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH, 1}}}

Cube corner positions in cube frame.
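
The corner table follows directly from HALF_WIDTH. As a minimal sketch that does not depend on trifinger_object_tracking (the namespace `sketch` and the helper `corner_distance` are hypothetical names introduced here for illustration), the same array can be rebuilt and sanity-checked with the standard library only:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

namespace sketch
{
// Same layout as CornerPositionArray: 8 corners, each (x, y, z, 1).
using CornerPositionArray = std::array<std::array<float, 4>, 8>;

constexpr float WIDTH = 0.0652f;
constexpr float HALF_WIDTH = WIDTH / 2.0f;

// Corner order copied from BaseCubeModel::cube_corners.
constexpr CornerPositionArray cube_corners{{
    {+HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH, 1},
    {+HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH, 1},
    {-HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH, 1},
    {-HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH, 1},
    {+HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH, 1},
    {+HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH, 1},
    {-HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH, 1},
    {-HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH, 1},
}};

// Euclidean distance between corners a and b (the w component is ignored).
inline float corner_distance(std::size_t a, std::size_t b)
{
    assert(a < cube_corners.size() && b < cube_corners.size());
    float sum = 0.0f;
    for (std::size_t k = 0; k < 3; ++k)
    {
        const float d = cube_corners[a][k] - cube_corners[b][k];
        sum += d * d;
    }
    return std::sqrt(sum);
}
}  // namespace sketch
```

With this layout, corners 0 and 1 share an edge, so `sketch::corner_distance(0, 1)` should be close to WIDTH, while corners 0 and 6 are opposite ends of a space diagonal.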

-

class BaseCuboidModel

- #include <cube_model.hpp>

Base class for all cuboid object models.

See Object Model for more information on the available object models.

Subclassed by trifinger_object_tracking::BaseCubeModel, trifinger_object_tracking::CubeV2ColorOrderBase, trifinger_object_tracking::Cuboid2x2x8V2Model

Public Types

-

typedef std::shared_ptr<BaseCuboidModel> Ptr

-

typedef std::shared_ptr<const BaseCuboidModel> ConstPtr

-

typedef std::array<std::array<float, 4>, 8> CornerPositionArray

Public Functions

-

virtual std::string get_name() const = 0

Get name of the model.

-

virtual CornerPositionArray get_corners() const = 0

Get homogeneous coordinates (x, y, z, 1) of the cube corners.

-

virtual CubeFace map_color_to_face(FaceColor color) const = 0

Get the cuboid face that has the specified colour.

-

virtual ColorModel get_color_model() const = 0

Get the colour model that is used for this object.

-

inline BaseCuboidModel()

-

inline std::array<unsigned int, 4> get_face_corner_indices(FaceColor color) const

Maps each color to the indices of the corresponding cube corners.

Public Static Functions

-

static inline std::array<FaceColor, 6> get_colors()

Get face colours. The index in the list refers to the face index.

-

static inline std::string get_color_name(FaceColor color)

Get name of the given colour.

-

static inline std::array<int, 3> get_rgb(FaceColor color)

Get RGB value of the given colour.

Note that the RGB values returned by this function are only meant for visualisation purposes and do not necessarily represent the actual shade of the colour on the given object (e.g. the value returned for red is always (255, 0, 0), independent of the object).

-

static inline std::array<int, 3> get_hsv(FaceColor color)

Get HSV value of the given colour.

Like get_rgb(), this is only for visualisation purposes and does not attempt to match the actual colour of the real object.

Public Static Attributes

-

static constexpr unsigned int N_FACES = 6

Number of faces of the cuboid.

-

static constexpr std::array<std::array<unsigned int, 4>, N_FACES> face_corner_indices = {{{0, 1, 2, 3}, {4, 5, 1, 0}, {5, 6, 2, 1}, {6, 7, 3, 2}, {7, 4, 0, 3}, {7, 6, 5, 4}}}

For each cuboid face the indices of the corresponding corners.

-

static constexpr float face_normal_vectors[6][3] = {{0, 0, 1}, {1, 0, 0}, {0, 1, 0}, {-1, 0, 0}, {0, -1, 0}, {0, 0, -1}}

Normal vectors of all cuboid faces.
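
The two tables are consistent with each other: for the 65mm cube, the mean of the four corners listed for a face lies at HALF_WIDTH along that face's normal. The following sketch checks this with the standard library only. It copies the corner positions from BaseCubeModel::cube_corners; the namespace `sketch` and the helpers `face_centre` and `centre_matches_normal` are hypothetical names, not part of the library.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

namespace sketch
{
constexpr float HALF_WIDTH = 0.0652f / 2.0f;
constexpr unsigned int N_FACES = 6;

// Corner positions (x, y, z) copied from BaseCubeModel::cube_corners,
// without the homogeneous 1.
constexpr std::array<std::array<float, 3>, 8> corners{{
    {+HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH},
    {+HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH},
    {-HALF_WIDTH, +HALF_WIDTH, +HALF_WIDTH},
    {-HALF_WIDTH, -HALF_WIDTH, +HALF_WIDTH},
    {+HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH},
    {+HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH},
    {-HALF_WIDTH, +HALF_WIDTH, -HALF_WIDTH},
    {-HALF_WIDTH, -HALF_WIDTH, -HALF_WIDTH},
}};

constexpr std::array<std::array<unsigned int, 4>, N_FACES> face_corner_indices = {{
    {0, 1, 2, 3}, {4, 5, 1, 0}, {5, 6, 2, 1}, {6, 7, 3, 2}, {7, 4, 0, 3}, {7, 6, 5, 4}}};

constexpr float face_normal_vectors[6][3] = {
    {0, 0, 1}, {1, 0, 0}, {0, 1, 0}, {-1, 0, 0}, {0, -1, 0}, {0, 0, -1}};

// Centre of a face, computed as the mean of its four corners.
inline std::array<float, 3> face_centre(std::size_t face)
{
    assert(face < N_FACES);
    std::array<float, 3> centre{{0.0f, 0.0f, 0.0f}};
    for (unsigned int corner : face_corner_indices[face])
    {
        for (std::size_t k = 0; k < 3; ++k)
        {
            centre[k] += corners[corner][k] / 4.0f;
        }
    }
    return centre;
}

// True if the face centre lies at HALF_WIDTH along the documented normal.
inline bool centre_matches_normal(std::size_t face, float tolerance = 1e-6f)
{
    const std::array<float, 3> centre = face_centre(face);
    for (std::size_t k = 0; k < 3; ++k)
    {
        if (std::fabs(centre[k] - HALF_WIDTH * face_normal_vectors[face][k]) > tolerance)
        {
            return false;
        }
    }
    return true;
}
}  // namespace sketch
```

Calling `sketch::centre_matches_normal(f)` for each face index f from 0 to 5 is a quick way to catch an accidental reordering of either table.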

-

class BaseObjectTrackerBackend

- #include <base_object_tracker_backend.hpp>

Base class for object tracker.

Implements the logic of writing the object pose to the time series. The method update_pose(), which retrieves the actual pose, needs to be implemented by the derived class.

Subclassed by trifinger_object_tracking::FakeObjectTrackerBackend, trifinger_object_tracking::SimulationObjectTrackerBackend

Public Functions

-

inline BaseObjectTrackerBackend(ObjectTrackerData::Ptr data)

-

~BaseObjectTrackerBackend()

-

void stop()

-

void store_buffered_data(const std::string &filename) const

Store the content of the time series buffer to a file.

- Parameters:

filename – Path to the file. If it already exists, it will be overwritten.

Protected Functions

-

virtual ObjectPose update_pose() = 0

Get a new estimate of the object pose.

-

class ColorSegmenter

- #include <color_segmenter.hpp>

Public Functions

-

ColorSegmenter(BaseCuboidModel::ConstPtr cube_model)

-

void detect_colors(const cv::Mat &image_bgr)

Detect colours in the image and create segmentation masks.

After calling this, dominant colours and segmentation masks are provided by get_dominant_colors() and get_mask().

- Parameters:

image_bgr – Input image in BGR channel order.

-

cv::Mat get_mask(FaceColor color) const

Get mask of the specified color.

-

cv::Mat get_segmented_image() const

Get image visualizing the color segmentation.

-

cv::Mat get_image() const

Get the original image.

-

std::vector<FaceColor> get_dominant_colors() const

-

class CubeDetector

- #include <cube_detector.hpp>

Detect coloured cube in images from a three-camera setup.

Public Functions

-

CubeDetector(BaseCuboidModel::ConstPtr cube_model, const std::array<trifinger_cameras::CameraParameters, N_CAMERAS> &camera_params)

- Parameters:

cube_model – The model that is used for detecting the cube.

camera_params – Calibration parameters of the cameras.

-

CubeDetector(BaseCuboidModel::ConstPtr cube_model, const std::array<std::string, N_CAMERAS> &camera_param_files)

- Parameters:

cube_model – The model that is used for detecting the cube.

camera_param_files – Paths to the camera calibration files.

-

ObjectPose detect_cube_single_thread(const std::array<cv::Mat, N_CAMERAS> &images)

-

ObjectPose detect_cube(const std::array<cv::Mat, N_CAMERAS> &images)

Detect cube in the given images.

- Parameters:

images – Images from cameras camera60, camera180, camera300.

- Returns:

Pose of the cube.

-

cv::Mat create_debug_image(bool fill_faces = false) const

Create debug image for the last call of detect_cube.

- Parameters:

fill_faces – If true, the cube is drawn with filled faces, otherwise only a wire frame is drawn.

- Returns:

Aggregate image showing different stages of the cube detection.

Public Static Attributes

-

static constexpr unsigned int N_CAMERAS = 3

Private Members

-

BaseCuboidModel::ConstPtr cube_model_

-

std::array<ColorSegmenter, N_CAMERAS> color_segmenters_

-

PoseDetector pose_detector_

Private Static Functions

-

static ObjectPose convert_pose(const Pose &pose)

Convert Pose to ObjectPose.

-

class CubeV1Model : public trifinger_object_tracking::BaseCubeModel

- #include <cube_model.hpp>

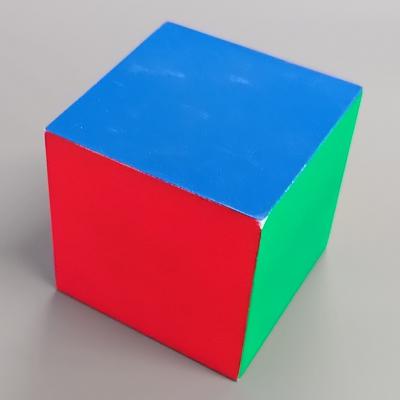

Model for 65mm cube version 1.

See Object Model for more information on the object model.

Public Functions

-

inline virtual std::string get_name() const override

Get name of the model.

-

inline virtual CubeFace map_color_to_face(FaceColor color) const override

Get the cuboid face that has the specified colour.

-

inline virtual ColorModel get_color_model() const override

Get the colour model that is used for this object.

-

class CubeV2ColorOrderBase : public virtual trifinger_object_tracking::BaseCuboidModel

- #include <cube_model.hpp>

Subclassed by trifinger_object_tracking::CubeV2Model, trifinger_object_tracking::CubeV3Model, trifinger_object_tracking::Cuboid2x2x8V2Model

-

class CubeV2Model : public trifinger_object_tracking::BaseCubeModel, private trifinger_object_tracking::CubeV2ColorOrderBase

- #include <cube_model.hpp>

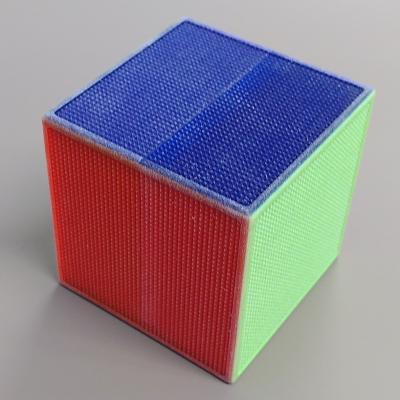

Model for 65mm cube version 2.

See Object Model for more information on the object model.

Public Functions

-

inline virtual std::string get_name() const override

Get name of the model.

-

inline virtual ColorModel get_color_model() const override

Get the colour model that is used for this object.

-

class CubeV3Model : public trifinger_object_tracking::BaseCubeModel, private trifinger_object_tracking::CubeV2ColorOrderBase

- #include <cube_model.hpp>

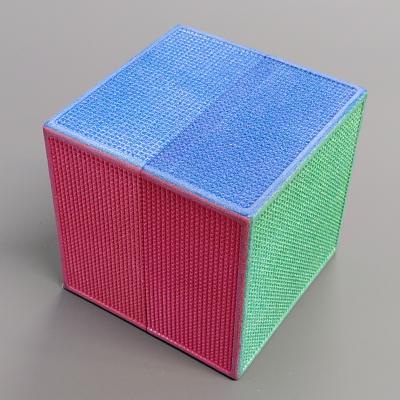

Model for 65mm cube version 3.

See Object Model for more information on the object model.

Public Functions

-

inline virtual std::string get_name() const override

Get name of the model.

-

inline virtual ColorModel get_color_model() const override

Get the colour model that is used for this object.

-

class CubeVisualizer

- #include <cube_visualizer.hpp>

Visualise object pose in the camera images.

The draw_cube() method can be used to project the object into the camera images and draw it either as wireframe or with filled faces for visualisation/debugging purposes.

Public Functions

-

inline CubeVisualizer(BaseCuboidModel::ConstPtr cube_model, const std::array<trifinger_cameras::CameraParameters, N_CAMERAS> &camera_params)

- Parameters:

cube_model – Model of the object that is visualised.

camera_params – Camera calibration parameters.

-

inline CubeVisualizer(BaseCuboidModel::ConstPtr cube_model, const std::array<std::string, N_CAMERAS> &camera_calib_files)

- Parameters:

cube_model – Model of the object that is visualised.

camera_calib_files – Paths to the camera parameter files.

-

std::array<cv::Mat, N_CAMERAS> draw_cube(const std::array<cv::Mat, N_CAMERAS> &images, const ObjectPose &object_pose, bool fill_faces = false, float opacity = 0.5)

Draw the object into the images at the specified pose.

- Parameters:

images – Images from the cameras. The images need to be ordered in the same way as the camera calibration parameters that are passed to the constructor.

object_pose – Pose of the object.

fill_faces – If false (default), only a monochrome wireframe of the object is drawn. If true, the faces of the cuboid are filled with the corresponding colours. The latter makes it possible to verify the orientation (i.e. which face points in which direction) but occludes more of the image.

opacity – Opacity of the visualisation (in range [0, 1]).

- Returns:

Images with the object visualisation.
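
The opacity parameter is documented only as a value in [0, 1]. One common way to apply such a value is linear alpha blending, the scheme provided by OpenCV's cv::addWeighted. Whether draw_cube() uses exactly this formula is an assumption. A minimal sketch for a single 8-bit channel, using the hypothetical helper name `blend_channel`:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Blend one 8-bit channel of the overlay into the camera image.
// opacity = 0 keeps the camera image, opacity = 1 shows only the overlay.
inline std::uint8_t blend_channel(std::uint8_t image, std::uint8_t overlay, float opacity)
{
    assert(opacity >= 0.0f && opacity <= 1.0f);
    const float mixed = opacity * overlay + (1.0f - opacity) * image;
    // Round to nearest and clamp to the valid 8-bit range.
    const float clamped = std::min(255.0f, std::max(0.0f, std::round(mixed)));
    return static_cast<std::uint8_t>(clamped);
}
```

Under this assumption, the default opacity of 0.5 gives the drawn object and the underlying image equal weight.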

-

std::array<cv::Mat, N_CAMERAS> draw_circle(const std::array<cv::Mat, N_CAMERAS> &images, const ObjectPose &object_pose, bool fill = true, float opacity = 0.5, float scale = 1.0)

Draw a circle into the images enclosing the object at the specified pose.

- Parameters:

images – Images from the cameras. The images need to be ordered in the same way as the camera calibration parameters that are passed to the constructor.

object_pose – Pose of the object.

fill – If true, a filled circle is drawn with some transparency. If false, only a solid contour is drawn.

opacity – Opacity of the visualisation (in range [0, 1]).

scale – Scale factor for the circle size. At 1.0 the cube is just fully enclosed.

- Returns:

Images with the object visualisation.

-

std::vector<std::vector<cv::Point2f>> get_projected_points(const ObjectPose &object_pose)

Get projected corner points for the given object pose.

-

inline void set_line_thickness(int line_thickness)

Set line thickness for wireframe cube.

Public Static Attributes

-

static constexpr unsigned int N_CAMERAS = 3

Private Functions

-

void draw_filled_cube(cv::Mat &image, size_t camera_index, const PoseDetector &pose_detector, const std::vector<cv::Point2f> &imgpoints)

Draw cube with filled faces in the given image.

-

void draw_cube_wireframe(cv::Mat &image, size_t camera_index, const std::vector<cv::Point2f> &imgpoints)

Draw wireframe cube in the given image.

Private Members

-

BaseCuboidModel::ConstPtr cube_model_

-

PoseDetector pose_detector_

-

int line_thickness_ = 2

-

class Cuboid2x2x8V2Model : public virtual trifinger_object_tracking::BaseCuboidModel, private trifinger_object_tracking::CubeV2ColorOrderBase

- #include <cube_model.hpp>

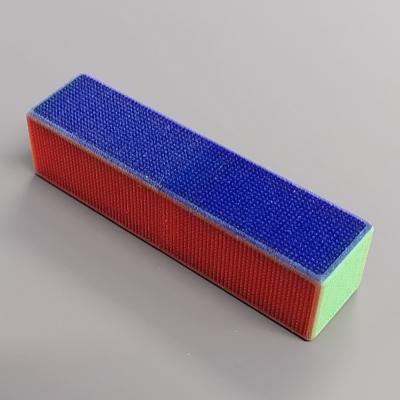

Model for the 2x2x8 cm cuboid version 2.

See Object Model for more information on the object model.

Public Functions

-

inline virtual std::string get_name() const override

Get name of the model.

-

inline virtual CornerPositionArray get_corners() const override

Get homogeneous coordinates (x, y, z, 1) of the cube corners.

-

inline virtual ColorModel get_color_model() const override

Get the colour model that is used for this object.

Public Static Attributes

-

static constexpr float LENGTH = 0.08

-

static constexpr float LONG_HALF_WIDTH = LENGTH / 2.0

-

static constexpr float WIDTH = 0.02

-

static constexpr float SHORT_HALF_WIDTH = WIDTH / 2.0

-

static constexpr CornerPositionArray cube_corners = {{{+SHORT_HALF_WIDTH, -LONG_HALF_WIDTH, +SHORT_HALF_WIDTH, 1}, {+SHORT_HALF_WIDTH, +LONG_HALF_WIDTH, +SHORT_HALF_WIDTH, 1}, {-SHORT_HALF_WIDTH, +LONG_HALF_WIDTH, +SHORT_HALF_WIDTH, 1}, {-SHORT_HALF_WIDTH, -LONG_HALF_WIDTH, +SHORT_HALF_WIDTH, 1}, {+SHORT_HALF_WIDTH, -LONG_HALF_WIDTH, -SHORT_HALF_WIDTH, 1}, {+SHORT_HALF_WIDTH, +LONG_HALF_WIDTH, -SHORT_HALF_WIDTH, 1}, {-SHORT_HALF_WIDTH, +LONG_HALF_WIDTH, -SHORT_HALF_WIDTH, 1}, {-SHORT_HALF_WIDTH, -LONG_HALF_WIDTH, -SHORT_HALF_WIDTH, 1}}}

Cube corner positions in cube frame.

-

class CvSubImages

- #include <cv_sub_images.hpp>

Combine multiple images of the same size in a grid.

Creates an n x m grid of images, similar to the subplots of matplotlib.

Public Functions

-

CvSubImages(cv::Size img_size, unsigned rows, unsigned cols, unsigned border = 5, cv::Scalar background = cv::Scalar(255, 255, 255))

- Parameters:

img_size – Size of the single images.

rows – Number of rows in the grid.

cols – Number of columns in the grid.

border – Size of the border between images in pixels.

background – Background colour.

-

void set_subimg(const cv::Mat &image, unsigned row, unsigned col)

Set a sub-image.

- Parameters:

image – The image.

row – Row in which the image is shown.

col – Column in which the image is shown.

-

const cv::Mat &get_image() const

Get the image grid.
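
The exact pixel layout of the grid is not specified here. As a rough sketch of how such a grid can be laid out, the code below assumes that the border is drawn both between the images and around the outer edge; this is an assumption, and the real class may place borders differently. The types `Size` and `Point` and the helpers `grid_size` and `subimg_origin` are hypothetical stand-ins that avoid a dependency on OpenCV.

```cpp
#include <cassert>

namespace sketch
{
struct Size
{
    unsigned width;
    unsigned height;
};

struct Point
{
    unsigned x;
    unsigned y;
};

// Size of the full grid image.
// ASSUMPTION: one border strip between neighbours and one around the outside.
inline Size grid_size(Size img, unsigned rows, unsigned cols, unsigned border = 5)
{
    assert(rows > 0 && cols > 0);
    return {cols * img.width + (cols + 1) * border,
            rows * img.height + (rows + 1) * border};
}

// Top-left pixel of the sub-image at (row, col) under the same assumption.
inline Point subimg_origin(Size img, unsigned row, unsigned col, unsigned border = 5)
{
    return {border + col * (img.width + border),
            border + row * (img.height + border)};
}
}  // namespace sketch
```

For 270x270 images in a 2x3 grid with the default border of 5 pixels, this layout yields an 830x555 canvas.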

-

struct Edge

- #include <cube_model.hpp>

Represents a cuboid edge, defined by its two corner points.

Public Members

-

unsigned int c1

Index of the first cuboid corner that belongs to the edge.

-

unsigned int c2

Index of the second cuboid corner that belongs to the edge.

-

class FakeObjectTrackerBackend : public trifinger_object_tracking::BaseObjectTrackerBackend

- #include <fake_object_tracker_backend.hpp>

Fake Object Tracker Backend for Testing.

This implementation of the backend returns fake poses (meant for testing the interface without a real object tracker running).

Public Functions

-

inline BaseObjectTrackerBackend(ObjectTrackerData::Ptr data)

Protected Functions

-

virtual ObjectPose update_pose() override

Get a new estimate of the object pose.

-

struct ObjectPose

- #include <object_pose.hpp>

Estimated pose of a tracked object.

Public Functions

-

ObjectPose() = default

-

inline ObjectPose(const Eigen::Matrix4d &matrix, double confidence = 0.0)

-

inline Eigen::Quaterniond quaternion() const

Get the orientation as Eigen::Quaterniond.

-

inline Eigen::Affine3d affine() const

Get the pose as Eigen::Affine3d.

-

template<class Archive>

inline void serialize(Archive &archive)

For serialization with cereal.

-

inline std::string to_string()

Public Members

-

Eigen::Vector3d position

Position (x, y, z)

-

Eigen::Vector4d orientation

Orientation quaternion (x, y, z, w)

-

double confidence = 0.0

Confidence of the accuracy of the given pose. Ranges from 0 to 1.
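
The orientation is stored in (x, y, z, w) order, whereas the Eigen::Quaterniond constructor takes its coefficients as (w, x, y, z), so the two are easy to mix up. The sketch below converts a unit quaternion in the (x, y, z, w) order used here into a 3x3 rotation matrix with the standard library only. The names `Quaternion`, `Matrix3` and `to_rotation_matrix` are hypothetical and not part of the library.

```cpp
#include <array>
#include <cassert>
#include <cmath>

namespace sketch
{
using Quaternion = std::array<double, 4>;              // (x, y, z, w)
using Matrix3 = std::array<std::array<double, 3>, 3>;  // row-major

inline Matrix3 to_rotation_matrix(const Quaternion &q)
{
    const double x = q[0], y = q[1], z = q[2], w = q[3];
    // The formula below is only valid for unit quaternions.
    assert(std::abs(x * x + y * y + z * z + w * w - 1.0) < 1e-9);
    return {{
        {1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)},
        {2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)},
        {2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)},
    }};
}
}  // namespace sketch
```

A rotation of 90 degrees about the z axis, i.e. the quaternion (0, 0, sin 45°, cos 45°), maps the x axis onto the y axis, which makes a convenient check that the coefficient order is right.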

-

class ObjectTrackerData

- #include <object_tracker_data.hpp>

Wrapper for the time series of the object tracker data.

Public Types

-

typedef std::shared_ptr<ObjectTrackerData> Ptr

Public Functions

-

ObjectTrackerData(const std::string &shared_memory_id_prefix, bool is_master, size_t history_length = 1000)

Public Members

-

std::shared_ptr<time_series::TimeSeriesInterface<ObjectPose>> poses

-

class ObjectTrackerFrontend

- #include <object_tracker_frontend.hpp>

Frontend to access the object tracker data.

Public Functions

-

inline ObjectTrackerFrontend(ObjectTrackerData::Ptr data)

-

ObjectPose get_pose(const time_series::Index t) const

Get the object pose at time index t.

-

ObjectPose get_current_pose() const

Get the latest object pose.

-

time_series::Index get_current_timeindex() const

Get the index of the current time step.

-

time_series::Index get_oldest_timeindex() const

Get the index of the oldest time step still held in the buffer.

-

time_series::Timestamp get_timestamp_ms(const time_series::Index t) const

Get time stamp of the given time step.

-

void wait_until_timeindex(const time_series::Index t) const

Wait until time index t is reached.

-

bool has_observations() const

Returns true if there are observations in the time series.
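
The relation between the current and the oldest time index can be illustrated with a toy bounded history. This is only a stand-in written for this page, not the time_series library: the class `BoundedHistory` and its behaviour (indices start at 0 and the oldest entry is dropped once the buffer is full) are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

namespace sketch
{
// Toy time series that keeps only the newest `history_length` elements.
template <typename T>
class BoundedHistory
{
public:
    using Index = long;

    explicit BoundedHistory(std::size_t history_length)
        : history_length_(history_length)
    {
        assert(history_length > 0);
    }

    // Append a new element; the oldest one is dropped once the buffer is full.
    void append(const T &value)
    {
        if (buffer_.size() == history_length_)
        {
            buffer_.pop_front();
            ++oldest_;
        }
        buffer_.push_back(value);
    }

    bool has_observations() const { return !buffer_.empty(); }

    Index get_oldest_timeindex() const { return oldest_; }

    // In this sketch the result is -1 while the buffer is still empty.
    Index get_current_timeindex() const
    {
        return oldest_ + static_cast<Index>(buffer_.size()) - 1;
    }

    // Element at time index t; t must still be held in the buffer.
    const T &get(Index t) const
    {
        assert(t >= oldest_ && t <= get_current_timeindex());
        return buffer_[static_cast<std::size_t>(t - oldest_)];
    }

private:
    std::size_t history_length_;
    std::deque<T> buffer_;
    Index oldest_ = 0;
};
}  // namespace sketch
```

In this model, a reader that falls behind by more than the history length can no longer access the entries it missed, which is why checking the oldest index before requesting an old pose is a sensible precaution.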

Private Members

-

ObjectTrackerData::Ptr data_

-

struct Pose

- #include <types.hpp>

Public Functions

-

inline Pose(const cv::Vec3f &translation, const cv::Vec3f &rotation, float confidence = 0.0)

Public Members

-

cv::Vec3f translation

-

cv::Vec3f rotation

Rotation vector.

-

float confidence = 0.0
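
Pose stores the rotation as a rotation vector, while ObjectPose stores a quaternion in (x, y, z, w) order. Assuming the rotation vector follows the axis-angle convention of OpenCV's cv::Rodrigues (the direction is the rotation axis, the length is the angle in radians), the conversion can be sketched as follows. This is an illustration under that assumption and not necessarily what convert_pose() does; the names `Vec3`, `Quaternion` and `rotation_vector_to_quaternion` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

namespace sketch
{
using Vec3 = std::array<double, 3>;
using Quaternion = std::array<double, 4>;  // (x, y, z, w)

inline Quaternion rotation_vector_to_quaternion(const Vec3 &r)
{
    // The rotation angle is the length of the rotation vector.
    const double angle = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    assert(std::isfinite(angle));
    if (angle < 1e-12)
    {
        // No rotation: return the identity and avoid dividing by zero.
        return {{0.0, 0.0, 0.0, 1.0}};
    }
    // Scale factor that normalises the axis and applies sin(angle / 2).
    const double s = std::sin(angle / 2.0) / angle;
    return {{r[0] * s, r[1] * s, r[2] * s, std::cos(angle / 2.0)}};
}
}  // namespace sketch
```

The early return for very small angles matters in practice, because a pose with no rotation is a perfectly valid input.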

-

class PoseDetector

- #include <pose_detector.hpp>

Public Types

-

typedef std::array<std::vector<std::vector<cv::Point>>, PoseDetector::N_CAMERAS> MasksPixels

Public Functions

-

PoseDetector(BaseCuboidModel::ConstPtr cube_model, const std::array<trifinger_cameras::CameraParameters, N_CAMERAS> &camera_parameters)

-

Pose find_pose(const std::array<std::vector<FaceColor>, N_CAMERAS> &dominant_colors, const std::array<std::vector<cv::Mat>, N_CAMERAS> &masks)

-

std::vector<std::vector<cv::Point2f>> get_projected_points() const

-

std::vector<std::pair<FaceColor, std::array<unsigned int, 4>>> get_visible_faces(unsigned int camera_idx, const cv::Affine3f &cube_pose_world) const

Get corner indices of the visible faces.

Determines which faces of the object are visible to the camera and returns the corner indices of these faces.

- Parameters:

camera_idx – Index of the camera.

cube_pose_world – Pose of the cube in the world frame.

- Returns:

For each visible face a pair of the face color and the list of the corner indices of the four corners of that face.
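
A standard way to decide which faces of a convex object are visible is back-face culling: a face is visible if its outward normal points towards the camera. The sketch below applies this test to a 65mm cube that is centred at the origin with identity orientation, and to a camera described only by its position. These simplifications, and the helper names in the namespace `sketch`, are assumptions for illustration; the actual implementation of get_visible_faces() may differ.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

namespace sketch
{
using Vec3 = std::array<float, 3>;

constexpr float HALF_WIDTH = 0.0652f / 2.0f;
constexpr std::size_t N_FACES = 6;

// Outward face normals, copied from BaseCuboidModel::face_normal_vectors.
constexpr float face_normal_vectors[N_FACES][3] = {
    {0, 0, 1}, {1, 0, 0}, {0, 1, 0}, {-1, 0, 0}, {0, -1, 0}, {0, 0, -1}};

// Back-face test for one face of an origin-centred, axis-aligned cube.
inline bool is_face_visible(std::size_t face, const Vec3 &camera_position)
{
    assert(face < N_FACES);
    float dot = 0.0f;
    for (std::size_t k = 0; k < 3; ++k)
    {
        const float normal = face_normal_vectors[face][k];
        // For this cube the face centre is HALF_WIDTH * normal.
        const float to_camera = camera_position[k] - HALF_WIDTH * normal;
        dot += normal * to_camera;
    }
    // Visible if the normal has a positive component towards the camera.
    return dot > 0.0f;
}

// Indices of all faces that pass the back-face test.
inline std::vector<std::size_t> visible_faces(const Vec3 &camera_position)
{
    std::vector<std::size_t> visible;
    for (std::size_t face = 0; face < N_FACES; ++face)
    {
        if (is_face_visible(face, camera_position))
        {
            visible.push_back(face);
        }
    }
    return visible;
}
}  // namespace sketch
```

A camera looking straight down the z axis sees only face 0, while a camera on the (1, 1, 1) diagonal sees faces 0, 1 and 2. At most three faces of a cuboid can be visible from any single viewpoint.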

-

std::vector<std::pair<FaceColor, std::array<unsigned int, 4>>> get_visible_faces(unsigned int camera_idx) const

Get corner indices of the visible faces.

Overloaded version that uses the last detected pose of the cube. Call find_pose() first, otherwise the result is undefined!

- Parameters:

camera_idx – Index of the camera.

- Returns:

For each visible face a pair of the face color and the list of the corner indices of the four corners of that face.

-

inline unsigned int get_num_misclassified_pixels() const

-

inline float get_segmented_pixels_ratio() const

-

inline float get_confidence() const

-

void set_pose(const Pose &pose)

Public Static Attributes

-

static constexpr unsigned int N_CAMERAS = 3

Number of cameras.

Private Functions

-

void optimize_using_optim(const std::array<std::vector<FaceColor>, N_CAMERAS> &dominant_colors, const std::array<std::vector<cv::Mat>, N_CAMERAS> &masks)

-

float cost_function(const cv::Vec3f &position, const cv::Vec3f &orientation, const std::array<std::vector<FaceColor>, N_CAMERAS> &dominant_colors, const MasksPixels &masks_pixels, const float distance_cost_scaling, const float invisibility_cost_scaling, const float height_cost_scaling)

-

float compute_confidence(const cv::Vec3f &position, const cv::Vec3f &orientation, const std::array<std::vector<FaceColor>, N_CAMERAS> &dominant_colors, const MasksPixels &masks_pixels)

-

void compute_color_visibility(const FaceColor &color, const cv::Mat &face_normals, const cv::Mat &cube_corners, bool *is_visible, float *face_normal_dot_camera_direction) const

-

void compute_face_normals_and_corners(const unsigned int camera_idx, const cv::Affine3f &cube_pose_world, cv::Mat *normals, cv::Mat *corners) const

-

bool is_face_visible(FaceColor color, unsigned int camera_idx, const cv::Affine3f &cube_pose_world, float *out_dot_product = nullptr) const

Private Members

-

BaseCuboidModel::ConstPtr cube_model_

-

cv::Mat corners_in_cube_frame_

-

cv::Mat reference_vector_normals_

-

const unsigned int num_total_pixels_in_image_

Total number of pixels in one image (i.e. width*height).

-

unsigned int num_misclassified_pixels_ = 0

Number of misclassified pixels in the last call of find_pose().

-

float segmented_pixels_ratio_ = 0

-

float confidence_ = 0.0

-

typedef std::array<std::vector<std::vector<cv::Point>>, PoseDetector::N_CAMERAS> MasksPixels

-

class PyBulletTriCameraObjectTrackerDriver : public robot_interfaces::SensorDriver<trifinger_object_tracking::TriCameraObjectObservation, trifinger_cameras::TriCameraInfo>

- #include <pybullet_tricamera_object_tracker_driver.hpp>

Driver to get rendered camera images and object pose from pyBullet.

This is a simulation-based replacement for TriCameraObjectTrackerDriver.

Public Functions

-

PyBulletTriCameraObjectTrackerDriver(pybind11::object tracking_object, robot_interfaces::TriFingerTypes::BaseDataPtr robot_data, bool render_images = true, trifinger_cameras::Settings settings = trifinger_cameras::Settings())

- Parameters:

tracking_object – Python object of the tracked object. Needs to have a method get_state() which returns a tuple of object position (x, y, z) and object orientation quaternion (x, y, z, w).

render_images – Set to false to disable image rendering. Images in the observations will be uninitialized. Use this if you only need the object pose and are not interested in the actual images. Rendering is pretty slow, so by disabling it, the simulation may run much faster.

-

trifinger_cameras::TriCameraInfo get_sensor_info() override

Get the camera parameters.

Internally, this calls trifinger_cameras::PyBulletTriCameraDriver::get_sensor_info(); see there for more information.

-

trifinger_object_tracking::TriCameraObjectObservation get_observation() override

Get the latest observation.

Private Members

-

trifinger_cameras::PyBulletTriCameraDriver camera_driver_

PyBullet driver instance for rendering images.

-

pybind11::object tracking_object_

Python object of the tracked object.

-

class ScopedTimer

- #include <scoped_timer.hpp>

Public Functions

-

inline ScopedTimer(const std::string &name)

-

inline ~ScopedTimer()
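
The reference gives no description for ScopedTimer. Its signature (a name passed to the constructor, an inline destructor) and the includes of scoped_timer.hpp (`<chrono>`, `<iostream>`, `<string>`) suggest an RAII timer that reports how long a scope took. The following is a minimal sketch of that pattern under this assumption; `SketchScopedTimer`, its output format and the optional stream argument are hypothetical and not taken from the library.

```cpp
#include <cassert>
#include <chrono>
#include <iostream>
#include <sstream>
#include <string>

// Hypothetical RAII timer: starts the clock on construction and prints the
// elapsed time when it goes out of scope.
class SketchScopedTimer
{
public:
    explicit SketchScopedTimer(const std::string &name,
                               std::ostream &out = std::cout)
        : name_(name), out_(out), start_(std::chrono::steady_clock::now())
    {
    }

    ~SketchScopedTimer()
    {
        const auto elapsed = std::chrono::steady_clock::now() - start_;
        const double ms =
            std::chrono::duration<double, std::milli>(elapsed).count();
        out_ << name_ << ": " << ms << " ms\n";
    }

    // Copying a running timer would report the same scope twice.
    SketchScopedTimer(const SketchScopedTimer &) = delete;
    SketchScopedTimer &operator=(const SketchScopedTimer &) = delete;

private:
    std::string name_;
    std::ostream &out_;
    std::chrono::steady_clock::time_point start_;
};
```

Such a timer is typically declared as the first statement of the block to be measured, so that its destructor runs when the block ends.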

-

class SimulationObjectTrackerBackend : public trifinger_object_tracking::BaseObjectTrackerBackend

- #include <simulation_object_tracker_backend.hpp>

Object Tracker Backend for Simulation.

This implementation of the backend gets the object pose directly from simulation.

Public Functions

-

inline SimulationObjectTrackerBackend(ObjectTrackerData::Ptr data, pybind11::object object, bool real_time_mode = true)

Initialize.

- Parameters:

data – Instance of the ObjectTrackerData.

object – Python object that provides access to the object’s pose. It is expected to have a method get_pose() that returns a tuple of object position ([x, y, z]) and orientation as quaternion ([x, y, z, w]).

real_time_mode – If true, the object pose will be updated at 33 Hz.
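
Updating at a fixed rate such as 33 Hz (a period of roughly 30.3 ms) is usually done by sleeping until precomputed deadlines, so that the time spent in the update itself does not accumulate as drift. The sketch below shows that scheduling pattern in standard C++; `run_at_fixed_rate` is a hypothetical helper, and how the backend actually implements its loop is not stated in this reference.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Calls `update` `iterations` times. After each call the function sleeps
// until the next deadline, where deadlines are spaced exactly `period` apart.
inline void run_at_fixed_rate(const std::function<void()> &update,
                              int iterations,
                              std::chrono::steady_clock::duration period)
{
    auto next_deadline = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i)
    {
        update();
        next_deadline += period;
        std::this_thread::sleep_until(next_deadline);
    }
}
```

For 33 Hz, a period of `std::chrono::microseconds(30303)` would be a reasonable choice.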

Protected Functions

-

virtual ObjectPose update_pose() override

Get a new estimate of the object pose.

-

struct Stats

- #include <pose_detector.hpp>

Public Members

-

cv::Vec3f lower_bound

-

cv::Vec3f upper_bound

-

cv::Vec3f mean

-

struct TriCameraObjectObservation : public trifinger_cameras::TriCameraObservation

- #include <tricamera_object_observation.hpp>

Observation of three cameras + object tracking.

Public Functions

-

TriCameraObjectObservation() = default

-

inline TriCameraObjectObservation(const trifinger_cameras::TriCameraObservation &observation)

-

template<class Archive>

inline void serialize(Archive &archive)

Public Members

-

trifinger_object_tracking::ObjectPose object_pose = {}

-

trifinger_object_tracking::ObjectPose filtered_object_pose = {}

-

class TriCameraObjectTrackerDriver : public robot_interfaces::SensorDriver<TriCameraObjectObservation, trifinger_cameras::TriCameraInfo>

- #include <tricamera_object_tracking_driver.hpp>

Driver to create three instances of the PylonDriver and get observations from them.

Public Functions

-

TriCameraObjectTrackerDriver(const std::string &device_id_1, const std::string &device_id_2, const std::string &device_id_3, BaseCuboidModel::ConstPtr cube_model, bool downsample_images = true, trifinger_cameras::Settings settings = trifinger_cameras::Settings())

- Parameters:

device_id_1 – Device user ID of the first camera.

device_id_2 – Device user ID of the second camera.

device_id_3 – Device user ID of the third camera.

cube_model – The model that is used for detecting the cube.

downsample_images – If set to true (default), images are downsampled to half their original size for object tracking.

settings – Settings for the cameras.
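
To illustrate what downsampling by a factor of two involves, the sketch below halves the width and height of a grayscale image by averaging each 2x2 block. This is a dependency-free illustration only: the driver presumably relies on OpenCV for resizing, and both the interpretation of "half their original size" as half width and half height and the names `GrayImage` and `downsample_by_two` are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical minimal image type: row-major, one byte per pixel.
struct GrayImage
{
    std::size_t width;
    std::size_t height;
    std::vector<std::uint8_t> pixels;
};

// Halves width and height by averaging each 2x2 block of input pixels.
// A trailing odd row or column is dropped.
inline GrayImage downsample_by_two(const GrayImage &in)
{
    if (in.pixels.size() != in.width * in.height)
    {
        throw std::invalid_argument("pixel buffer does not match dimensions");
    }
    GrayImage out{in.width / 2, in.height / 2, {}};
    out.pixels.reserve(out.width * out.height);
    for (std::size_t y = 0; y < out.height; ++y)
    {
        for (std::size_t x = 0; x < out.width; ++x)
        {
            const std::size_t top = (2 * y) * in.width + 2 * x;
            const std::size_t bottom = top + in.width;
            const unsigned int sum = in.pixels[top] + in.pixels[top + 1] +
                                     in.pixels[bottom] + in.pixels[bottom + 1];
            out.pixels.push_back(static_cast<std::uint8_t>(sum / 4));
        }
    }
    return out;
}
```

The output has a quarter of the pixels of the input, which is why downsampling can noticeably reduce the cost of per-pixel processing.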

-

TriCameraObjectTrackerDriver(const std::filesystem::path &camera_calibration_file_1, const std::filesystem::path &camera_calibration_file_2, const std::filesystem::path &camera_calibration_file_3, BaseCuboidModel::ConstPtr cube_model, bool downsample_images = true, trifinger_cameras::Settings settings = trifinger_cameras::Settings())

- Parameters:

camera_calibration_file_1 – Calibration file of the first camera.

camera_calibration_file_2 – Calibration file of the second camera.

camera_calibration_file_3 – Calibration file of the third camera.

cube_model – The model that is used for detecting the cube.

downsample_images – If set to true (default), images are downsampled to half their original size for object tracking.

settings – Settings for the cameras.

-

trifinger_cameras::TriCameraInfo get_sensor_info() override

Get the camera parameters.

Internally, this calls trifinger_cameras::TriCameraDriver::get_sensor_info(); see there for more information.

-

TriCameraObjectObservation get_observation() override

Get the latest observation from the three cameras.

- Returns:

TriCameraObjectObservation

-

cv::Mat get_debug_image(bool fill_faces = false)

Fetch an observation from the cameras and create a debug image.

The debug image shows various visualisations of the object detection, see CubeDetector::create_debug_image.

See also

CubeDetector::create_debug_image

- Parameters:

fill_faces –

- Returns:

Debug image showing the result of the object detection.

Public Static Attributes

-

static constexpr std::chrono::milliseconds rate = std::chrono::milliseconds(100)

Rate at which images are acquired, expressed as the time between frames (100 ms, i.e. 10 Hz).

-

static constexpr unsigned int N_CAMERAS = 3

Private Members

-

bool downsample_images_ = true

-

trifinger_cameras::TriCameraDriver camera_driver_

-

trifinger_object_tracking::CubeDetector cube_detector_

-

ObjectPose previous_pose_

-

namespace literals

-

namespace robot_interfaces

-

namespace trifinger_cameras

-

namespace trifinger_object_tracking

Enums

-

enum class ColorModel

Different color segmentation models that are supported.

Names correspond to the objects; see the documentation of supported objects for more information.

Values:

-

enumerator CUBE_V1

-

enumerator CUBE_V2

-

enumerator CUBOID_V2

Cuboid v2 is made of the same material as cube v2, but this color model is fine-tuned with images of the cuboid.

-

enum CubeFace

Values:

-

enumerator FACE_0

-

enumerator FACE_1

-

enumerator FACE_2

-

enumerator FACE_3

-

enumerator FACE_4

-

enumerator FACE_5

-

enum FaceColor

Values:

-

enumerator RED

-

enumerator GREEN

-

enumerator BLUE

-

enumerator CYAN

-

enumerator MAGENTA

-

enumerator YELLOW

-

enumerator N_COLORS

Functions

-

std::array<float, XGB_NUM_CLASSES> xgb_classify_cube_v1(std::array<float, XGB_NUM_FEATURES> &sample)

-

std::array<float, XGB_NUM_CLASSES> xgb_classify_cube_v2(std::array<float, XGB_NUM_FEATURES> &sample)

-

std::array<float, XGB_NUM_CLASSES> xgb_classify_cuboid_v2(std::array<float, XGB_NUM_FEATURES> &sample)

-

inline std::array<float, XGB_NUM_CLASSES> xgb_classify(const ColorModel color_model, std::array<float, XGB_NUM_FEATURES> &sample)
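
The overload taking a `ColorModel` presumably selects one of the three model-specific classifiers above. The sketch below shows such a dispatch together with picking the highest-scoring class from the returned array. It is self-contained and uses stand-ins: the `stub_*` classifiers return fixed scores, and `sketch_classify` and `most_likely_class` are hypothetical names. What the seven classes mean is not stated in this reference.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <stdexcept>

constexpr int XGB_NUM_CLASSES = 7;
constexpr int XGB_NUM_FEATURES = 6;

enum class ColorModel { CUBE_V1, CUBE_V2, CUBOID_V2 };

using Sample = std::array<float, XGB_NUM_FEATURES>;
using Scores = std::array<float, XGB_NUM_CLASSES>;

// Stub classifiers (not the real models): each puts the full score on one
// fixed class so that the dispatch can be observed.
inline Scores stub_scores(std::size_t winner)
{
    Scores scores{};
    scores[winner] = 1.0f;
    return scores;
}
inline Scores stub_classify_cube_v1(Sample &) { return stub_scores(0); }
inline Scores stub_classify_cube_v2(Sample &) { return stub_scores(1); }
inline Scores stub_classify_cuboid_v2(Sample &) { return stub_scores(2); }

// Selects the classifier that belongs to the given color model.
inline Scores sketch_classify(ColorModel color_model, Sample &sample)
{
    switch (color_model)
    {
        case ColorModel::CUBE_V1:
            return stub_classify_cube_v1(sample);
        case ColorModel::CUBE_V2:
            return stub_classify_cube_v2(sample);
        case ColorModel::CUBOID_V2:
            return stub_classify_cuboid_v2(sample);
    }
    throw std::invalid_argument("unknown color model");
}

// Index of the class with the highest score.
inline std::size_t most_likely_class(const Scores &scores)
{
    return static_cast<std::size_t>(std::distance(
        scores.begin(), std::max_element(scores.begin(), scores.end())));
}
```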

-

CubeDetector create_trifingerpro_cube_detector(BaseCuboidModel::ConstPtr cube_model, bool downsample_images = true)

Create cube detector for a TriFingerPro robot.

Loads the camera calibration of the robot on which it is executed and creates a CubeDetector instance for it.

- Parameters:

cube_model – The model that is used for detecting the cube.

downsample_images – If set to true (default), images are downsampled to half their original size for object tracking.

-

std::ostream &operator<<(std::ostream &os, const FaceColor &color)
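
A stream operator for an enum such as FaceColor typically maps each enumerator to a string. The following sketch re-declares the enum locally so that it compiles on its own; the printed names and the "UNKNOWN" fallback are assumptions, as the reference does not document the actual output format.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>

// Local copy of the enumerators listed above; N_COLORS doubles as the count.
enum FaceColor { RED, GREEN, BLUE, CYAN, MAGENTA, YELLOW, N_COLORS };

inline std::ostream &operator<<(std::ostream &os, const FaceColor &color)
{
    static const char *const names[N_COLORS] = {
        "RED", "GREEN", "BLUE", "CYAN", "MAGENTA", "YELLOW"};
    if (color >= RED && color < N_COLORS)
    {
        os << names[color];
    }
    else
    {
        // N_COLORS itself (or any out-of-range value) is not a real color.
        os << "UNKNOWN";
    }
    return os;
}
```

Keeping a sentinel like N_COLORS as the last enumerator lets the name table and loops over all colors be sized from the enum itself.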

-

BaseCuboidModel::ConstPtr get_model_by_name(const std::string &name)

Get object model instance by name.

- Parameters:

name – Name of the model type. One of cube_v1, cube_v2, cube_v3, cuboid_2x2x8_v2 (same names as returned by BaseCuboidModel::get_name()).

- Returns:

Instance of the specified model.
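
A lookup like this is commonly implemented as a table that maps names to factory functions. The sketch below uses the four model names listed above with empty stand-in classes; `SketchModel`, the `Sketch*` classes and `sketch_get_model_by_name` are hypothetical, and throwing `std::invalid_argument` for an unknown name is an assumption, since the reference does not say how the real function reacts.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Stand-in for the model base class, reduced to the name accessor.
struct SketchModel
{
    virtual ~SketchModel() = default;
    virtual std::string get_name() const = 0;
};
using SketchModelPtr = std::shared_ptr<const SketchModel>;

struct SketchCubeV1 : SketchModel
{
    std::string get_name() const override { return "cube_v1"; }
};
struct SketchCubeV2 : SketchModel
{
    std::string get_name() const override { return "cube_v2"; }
};
struct SketchCubeV3 : SketchModel
{
    std::string get_name() const override { return "cube_v3"; }
};
struct SketchCuboid2x2x8V2 : SketchModel
{
    std::string get_name() const override { return "cuboid_2x2x8_v2"; }
};

template <typename Model>
SketchModelPtr make_model()
{
    return std::make_shared<const Model>();
}

// Looks up the factory registered for `name` and creates the model.
inline SketchModelPtr sketch_get_model_by_name(const std::string &name)
{
    static const std::map<std::string, SketchModelPtr (*)()> factories = {
        {"cube_v1", &make_model<SketchCubeV1>},
        {"cube_v2", &make_model<SketchCubeV2>},
        {"cube_v3", &make_model<SketchCubeV3>},
        {"cuboid_2x2x8_v2", &make_model<SketchCuboid2x2x8V2>},
    };
    const auto it = factories.find(name);
    if (it == factories.end())
    {
        throw std::invalid_argument("Unknown model name: " + name);
    }
    return it->second();
}
```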

-

cv::Mat getPoseMatrix(cv::Point3f, cv::Point3f)

-

std::array<trifinger_cameras::CameraParameters, 3> load_camera_parameters(std::array<std::string, 3> parameter_files)

Load camera calibration parameters from YAML files.

- Parameters:

parameter_files – List of YAML files. Expected order is camera60, camera180, camera300.

- Throws:

std::runtime_error – if loading of the parameters fails.

- Returns:

Camera calibration parameters.
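
The contract described above (three files in a fixed order, `std::runtime_error` on failure) can be sketched without any YAML dependency. The code below only reads the raw file contents and does not parse them; `SketchCameraParameters` and `sketch_load_camera_parameters` are hypothetical stand-ins for the real types, and the file names in the usage note are made up.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <fstream>
#include <sstream>
#include <stdexcept>
#include <string>

// Hypothetical stand-in for trifinger_cameras::CameraParameters.
struct SketchCameraParameters
{
    std::string source_file;
    std::string raw_yaml;  // unparsed file content
};

// Reads the three files in the given order (camera60, camera180, camera300)
// and throws std::runtime_error if one of them cannot be opened.
inline std::array<SketchCameraParameters, 3> sketch_load_camera_parameters(
    const std::array<std::string, 3> &parameter_files)
{
    std::array<SketchCameraParameters, 3> result;
    for (std::size_t i = 0; i < parameter_files.size(); ++i)
    {
        std::ifstream file(parameter_files[i]);
        if (!file)
        {
            throw std::runtime_error("Failed to open " + parameter_files[i]);
        }
        std::ostringstream content;
        content << file.rdbuf();
        result[i] = {parameter_files[i], content.str()};
    }
    return result;
}
```

A caller would pass the files in camera order, for example `{"camera60.yml", "camera180.yml", "camera300.yml"}`, and wrap the call in a `try` block to report a missing calibration file.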

-

std::array<float, XGB_NUM_CLASSES> xgb_classify(const std::array<float, XGB_NUM_FEATURES> &sample)

-

cv::Mat segment_image(const cv::Mat &image_bgr)

Segment image using a binary pixel classifier.

- Parameters:

image_bgr – The image in BGR format.

- Returns:

Single-channel segmentation mask.
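
Conceptually, binary segmentation applies a per-pixel classifier to the BGR image and writes the decision into a one-channel mask. The sketch below shows this on a raw interleaved byte buffer instead of `cv::Mat`, with the classifier passed in as a callable. `sketch_segment_image` and `PixelClassifier` are hypothetical, the mask values 0 and 255 are an assumption, and any simple threshold rule used with it stands in for the trained classifier of the library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <stdexcept>
#include <vector>

// Decides for one pixel (given as blue, green, red) whether it is foreground.
using PixelClassifier =
    std::function<bool(std::uint8_t b, std::uint8_t g, std::uint8_t r)>;

// Takes an interleaved BGR buffer (3 bytes per pixel) and returns a mask with
// one byte per pixel: 255 for foreground, 0 for background.
inline std::vector<std::uint8_t> sketch_segment_image(
    const std::vector<std::uint8_t> &image_bgr,
    const PixelClassifier &is_foreground)
{
    if (image_bgr.size() % 3 != 0)
    {
        throw std::invalid_argument("expected 3 bytes per pixel");
    }
    std::vector<std::uint8_t> mask(image_bgr.size() / 3, 0);
    for (std::size_t i = 0; i < mask.size(); ++i)
    {
        if (is_foreground(
                image_bgr[3 * i], image_bgr[3 * i + 1], image_bgr[3 * i + 2]))
        {
            mask[i] = 255;
        }
    }
    return mask;
}
```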

Variables

-

constexpr int XGB_NUM_CLASSES = 7

-

constexpr int XGB_NUM_FEATURES = 6

- file demo_lightblue_segmenter.cpp

- #include <trifinger_object_tracking/xgboost_classifier_single_color_rgb.h>
  #include <iostream>
  #include <opencv2/opencv.hpp>
  #include <string>

Functions

-

int main(int argc, char *argv[])

- file base_object_tracker_backend.hpp

- #include <atomic>
  #include <string>
  #include <thread>
  #include "object_pose.hpp"
  #include "object_tracker_data.hpp"

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file color_model.hpp

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file color_segmenter.hpp

- #include <math.h>
  #include <chrono>
  #include <future>
  #include <opencv2/opencv.hpp>
  #include <trifinger_object_tracking/cube_model.hpp>
  #include <trifinger_object_tracking/types.hpp>

- file cube_detector.hpp

- #include <trifinger_object_tracking/color_segmenter.hpp>
  #include <trifinger_object_tracking/cube_model.hpp>
  #include <trifinger_object_tracking/cv_sub_images.hpp>
  #include <trifinger_object_tracking/object_pose.hpp>
  #include <trifinger_object_tracking/pose_detector.hpp>
  #include <trifinger_object_tracking/scoped_timer.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file cube_model.hpp

- #include <array>
  #include <cstdint>
  #include <map>
  #include <memory>
  #include <stdexcept>
  #include <string>
  #include "color_model.hpp"

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file cube_visualizer.hpp

- #include <trifinger_cameras/parse_yml.h>
  #include <opencv2/opencv.hpp>
  #include <trifinger_object_tracking/cube_model.hpp>
  #include <trifinger_object_tracking/object_pose.hpp>
  #include <trifinger_object_tracking/pose_detector.hpp>
  #include <trifinger_object_tracking/utils.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file cv_sub_images.hpp

- #include <opencv2/opencv.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file fake_object_tracker_backend.hpp

- #include "base_object_tracker_backend.hpp"

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file object_pose.hpp

- #include <Eigen/Eigen>
  #include <cereal/archives/json.hpp>
  #include <serialization_utils/cereal_eigen.hpp>

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file object_tracker_data.hpp

- #include <memory>
  #include <string>
  #include <time_series/multiprocess_time_series.hpp>
  #include "object_pose.hpp"

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file object_tracker_frontend.hpp

- #include <time_series/interface.hpp>
  #include "object_pose.hpp"
  #include "object_tracker_data.hpp"

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file pose_detector.hpp

- #include <Eigen/Geometry>
  #include <opencv2/core/eigen.hpp>
  #include <opencv2/opencv.hpp>
  #include <trifinger_cameras/camera_parameters.hpp>
  #include <trifinger_object_tracking/cube_model.hpp>
  #include <trifinger_object_tracking/types.hpp>
  #include <optim/optim.hpp>

Defines

-

OPTIM_ENABLE_ARMA_WRAPPERS

-

OPTIM_DONT_USE_OPENMP

- file pybullet_tricamera_object_tracker_driver.hpp

- #include <pybind11/embed.h>
  #include <pybind11/pybind11.h>
  #include <robot_interfaces/finger_types.hpp>
  #include <robot_interfaces/sensors/sensor_driver.hpp>
  #include <trifinger_cameras/camera_parameters.hpp>
  #include <trifinger_cameras/pybullet_tricamera_driver.hpp>
  #include <trifinger_object_tracking/tricamera_object_observation.hpp>

TriCameraObjectTrackerDriver for simulation (using rendered images).

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file scoped_timer.hpp

- #include <chrono>
  #include <iostream>
  #include <string>

- file simulation_object_tracker_backend.hpp

- #include <pybind11/pybind11.h>
  #include "base_object_tracker_backend.hpp"

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file tricamera_object_observation.hpp

- #include <array>
  #include <trifinger_cameras/tricamera_observation.hpp>
  #include <trifinger_object_tracking/object_pose.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file tricamera_object_tracking_driver.hpp

- #include <chrono>
  #include <filesystem>
  #include <robot_interfaces/sensors/sensor_driver.hpp>
  #include <trifinger_cameras/camera_parameters.hpp>
  #include <trifinger_cameras/pylon_driver.hpp>
  #include <trifinger_cameras/settings.hpp>
  #include <trifinger_cameras/tricamera_driver.hpp>
  #include <trifinger_object_tracking/cube_detector.hpp>
  #include <trifinger_object_tracking/tricamera_object_observation.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file types.hpp

- #include <opencv2/opencv.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file utils.hpp

- #include <trifinger_cameras/camera_parameters.hpp>

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

- file xgboost_classifier_single_color_rgb.h

- #include <array>
  #include <opencv2/opencv.hpp>

- file py_lightblue_segmenter.cpp

- #include <pybind11/embed.h>
  #include <pybind11/pybind11.h>
  #include <pybind11/stl.h>
  #include <pybind11_opencv/cvbind.hpp>
  #include <trifinger_object_tracking/xgboost_classifier_single_color_rgb.h>

Python bindings for the lightblue colour segmentation.

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

Functions

-

PYBIND11_MODULE(py_lightblue_segmenter, m)

- file py_object_tracker.cpp

- #include <pybind11/eigen.h>
  #include <pybind11/embed.h>
  #include <pybind11/pybind11.h>
  #include <pybind11/stl_bind.h>
  #include <pybind11_opencv/cvbind.hpp>
  #include <trifinger_cameras/camera_parameters.hpp>
  #include <trifinger_object_tracking/cube_detector.hpp>
  #include <trifinger_object_tracking/cube_model.hpp>
  #include <trifinger_object_tracking/fake_object_tracker_backend.hpp>
  #include <trifinger_object_tracking/object_pose.hpp>
  #include <trifinger_object_tracking/object_tracker_data.hpp>
  #include <trifinger_object_tracking/object_tracker_frontend.hpp>
  #include <trifinger_object_tracking/simulation_object_tracker_backend.hpp>

Python bindings for the object tracker interface.

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

Functions

-

PYBIND11_MODULE(py_object_tracker, m)

- file py_tricamera_types.cpp

- #include <pybind11/eigen.h>
  #include <pybind11/embed.h>
  #include <pybind11/pybind11.h>
  #include <pybind11/stl.h>
  #include <pybind11/stl/filesystem.h>
  #include <pybind11/stl_bind.h>
  #include <pybind11_opencv/cvbind.hpp>
  #include <trifinger_object_tracking/pybullet_tricamera_object_tracker_driver.hpp>
  #include <robot_interfaces/sensors/pybind_sensors.hpp>
  #include <robot_interfaces/sensors/sensor_driver.hpp>
  #include <trifinger_object_tracking/cube_visualizer.hpp>

Create bindings for three pylon-dependent camera sensors.

- License:

BSD 3-clause

- Copyright

2020, Max Planck Gesellschaft. All rights reserved.

Functions

-

PYBIND11_MODULE(py_tricamera_types, m)

- page License

- File color_model.hpp

BSD 3-clause

- File cube_detector.hpp

BSD 3-clause

- File cube_model.hpp

BSD 3-clause

- File cube_visualizer.hpp

BSD 3-clause

- File cv_sub_images.hpp

BSD 3-clause

- File py_tricamera_types.cpp

BSD 3-clause

- File tricamera_object_observation.hpp

BSD 3-clause

- File tricamera_object_tracking_driver.hpp

BSD 3-clause

- File types.hpp

BSD 3-clause

- File utils.hpp

BSD 3-clause

- dir demos

- dir include

- dir install/trifinger_object_tracking/include

- dir install

- dir srcpy

- dir include/trifinger_object_tracking

- dir install/trifinger_object_tracking

- dir install/trifinger_object_tracking/include/trifinger_object_tracking